Introduction

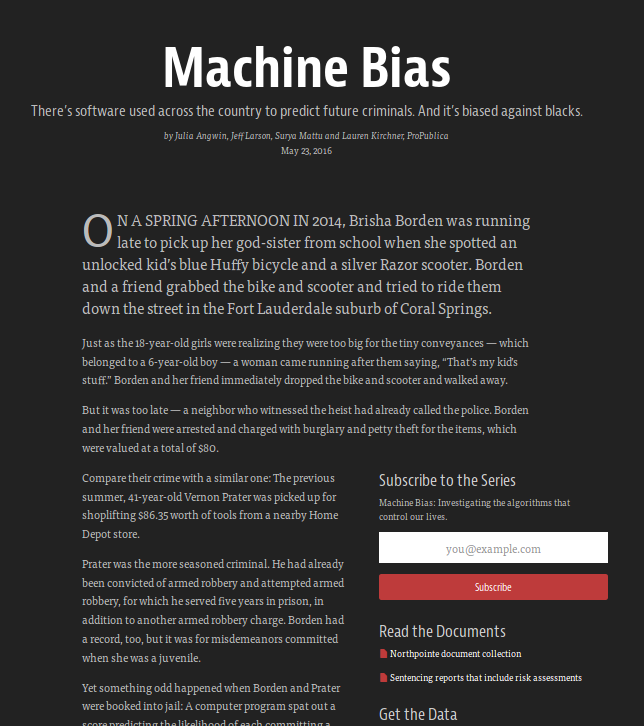

In many industries, ranging from criminal justice to medicine, algorithms perform risk assessments that inform consequential decisions and actions. As the scope of such algorithms grows, journalists and academics have voiced concerns that these models might encode human biases, since they are built on data that reflect biases in past decisions and outcomes.

Over the last few years, the research community has proposed formal, mathematical definitions of fairness to guide the conversation about, and the design of, equitable risk assessment tools. These definitions are intuitive, but each has its own limitations, and none of them is an ideal measure for detecting discriminatory algorithms. In fact, designing algorithms to satisfy these definitions can end up harming the well-being of minority (as well as majority) communities.

What is Fairness?

Barocas and Hardt frame discrimination, the opposite of fairness, as differentiation on an unjustified basis. Historically, such a basis has often been practically irrelevant to the task being performed.

Think of gender, race, or sexual orientation.

Other factors may be statistically relevant but are considered morally irrelevant: we make a conscious decision not to differentiate on their basis.

Think of physical disability or age.

US discrimination law recognizes two main doctrines:

- Disparate Treatment: This is the idea that a decision procedure is illegal if it explicitly takes certain protected factors into account, regardless of how much they end up influencing the outcome. Sometimes the violation is a matter of formality, such as a form that asks for age and gender and uses the answers in the decision. Disparate treatment also occurs when proxies are used deliberately in place of these factors, as in redlining districts where African Americans are in the majority.

- Disparate Impact: This doctrine applies even when no protected factors are used and the decision relies only on facially neutral attributes. If a disparity between groups still appears in the outcomes, the questions become whether the process is justified and whether the systemic bias was avoidable.
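To make disparate impact concrete, here is a minimal Python sketch on made-up data. The decision rule never reads the group label and uses only a zip code, yet approval rates diverge sharply because zip code correlates with group, much like a redlining proxy. The 0.8 threshold follows the "four-fifths" rule of thumb from US employment-selection guidelines, used here only as one common heuristic rather than a legal test. The applicants, zip codes, `PREFERRED_ZIPS`, and `approve` rule are all hypothetical.

```python
from collections import defaultdict

# Hypothetical toy applicants: (group, zip_code).
# The group label is used only for auditing, never by the decision rule.
applicants = [
    ("A", "10001"), ("A", "10001"), ("A", "10001"), ("A", "20002"),
    ("B", "20002"), ("B", "20002"), ("B", "20002"), ("B", "10001"),
]

# Hypothetical facially neutral rule: approve only a "preferred" zip code.
PREFERRED_ZIPS = {"10001"}


def approve(zip_code: str) -> bool:
    """Decision rule that looks only at the zip code."""
    return zip_code in PREFERRED_ZIPS


def selection_rates(records):
    """Fraction of applicants approved within each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, zip_code in records:
        total[group] += 1
        approved[group] += int(approve(zip_code))
    return {g: approved[g] / total[g] for g in total}


rates = selection_rates(applicants)
# Ratio of the lowest group selection rate to the highest.
ratio = min(rates.values()) / max(rates.values())

print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 3))  # 0.333
print("possible disparate impact" if ratio < 0.8 else "within four-fifths threshold")
```

Even though `approve` is facially neutral, group B is approved at a third of group A's rate, which is exactly the kind of outcome the disparate impact doctrine asks us to justify.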

As you can see, fairness is intrinsically tied to discrimination, which is not a general concept: it depends on context and domain, and it typically concerns important decisions that affect people's life situations.